Layered Cyber Security Services: Protect Your Data – Technologist

As artificial intelligence advances rapidly, organisations of all sizes and in all industries are encountering a critical challenge: using AI without putting their data at risk. Below, we discuss how layered cyber security services help companies protect their assets and data.

How can AI’s transformative potential be harnessed while safeguarding sensitive data?

Neuways’ layered cyber security services are designed to address these concerns head-on.

Security and Privacy Challenges in AI

AI systems introduce unique privacy risks, mainly because of how AI models work. These models are “trained” on vast amounts of data, absorbing and retaining information, including sensitive data if it is fed in inadvertently. Imagine an employee inputting strategic documents, client information, or financial records into an AI tool: this data could end up in the AI’s training dataset, risking exposure if the tool is misused or breached.

Evolving AI Security Risks

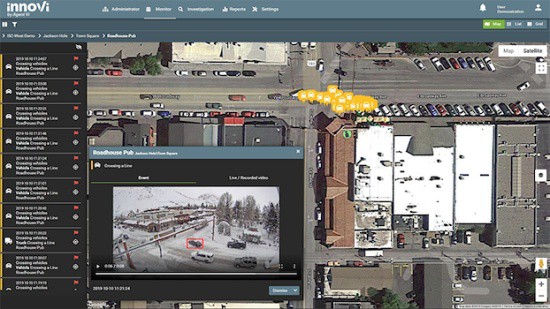

AI’s future in the workplace will be even more complex, with AI handling not just text but also images, audio, and video. This widens the overall attack surface, making layered cyber security defences crucial. As AI systems integrate into CRM tools, HR platforms, and beyond, the risks around data leakage, false outputs, and misuse by malicious actors will only grow.

Enforcing the Safe Use of AI

A clear, enforced policy is essential to help employees use AI tools responsibly. Neuways recommends strict guidelines on what data is permitted within AI systems, ideally excluding sensitive or personal information. Just as importantly, these policies should be backed by training to foster an understanding of AI risks, reinforcing that AI tools should be handled like any third-party service.
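One way to back such a policy with a technical control is to screen text before it leaves the organisation. The following is a minimal sketch in Python, not a complete data loss prevention tool: the `SENSITIVE_PATTERNS` table and the `redact` function are hypothetical names chosen for illustration, and the two patterns shown (email addresses and card-like numbers) are assumptions that a real policy would replace with its own categories.

```python
import re

# Hypothetical categories; a real policy would define its own list.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text):
    """Replace sensitive matches with placeholders.

    Returns the redacted text and the list of categories that were found,
    so the caller can decide whether to block, warn, or log the attempt.
    """
    found = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED {label.upper()}]", text)
        if count:
            found.append(label)
    return text, found
```

Pattern matching of this kind only catches data with a recognisable shape, so it complements staff training and does not replace it.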

The Importance of Layered Cyber Security Defences and Services

Layered security offers a comprehensive approach, combining policies with technical defences. Enterprise-grade AI solutions that incorporate data encryption, access controls, and auditing provide a safety net against both accidental and malicious data exposure. With these defences, organisations can better protect themselves against the risks introduced by AI technology.
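To illustrate how access controls and auditing can sit in front of an AI tool, here is a rough Python sketch. Everything in it is an assumption made for illustration: the `ALLOWED_ROLES` set, the in-memory `audit_log` list, and the `submit_prompt` function are hypothetical, and `send` stands in for whatever client actually calls the AI service. Encryption is left out to keep the example self-contained.

```python
import hashlib
from datetime import datetime, timezone

# Hypothetical role list; in practice this would come from an identity provider.
ALLOWED_ROLES = {"analyst", "engineer"}

# In-memory log for illustration; a real deployment would use durable storage.
audit_log = []


def submit_prompt(user, role, prompt, send):
    """Check the caller's role, record the attempt, then forward the prompt.

    Only a SHA-256 digest of the prompt is logged, so the audit trail
    does not itself become a second copy of sensitive text.
    """
    allowed = role in ALLOWED_ROLES
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "allowed": allowed,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
    })
    if not allowed:
        raise PermissionError(f"role {role!r} may not use the AI tool")
    return send(prompt)
```

Denied attempts are logged before the error is raised, so the audit trail covers both accidental and deliberate misuse.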

Enhancing Security in AI Design

A shift toward “privacy by design” in AI tools would relieve some of the current burden on organisations. Federated learning, where models are trained locally without transferring sensitive data, is another step in the right direction. This approach keeps sensitive data within the organisation’s control, adding an extra layer of protection.
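The idea behind federated learning can be shown with a toy example. The Python sketch below assumes a simple linear model `y = w*x + b` and uses federated averaging, one common aggregation scheme; the function names and the training setup are illustrative assumptions, not a description of any particular product. Each client computes an update on its own data, and only the resulting model parameters are sent to the server.

```python
def local_update(weights, data, lr=0.1):
    """One gradient-descent step on a client's private (x, y) pairs."""
    w, b = weights
    n = len(data)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
    return (w - lr * grad_w, b - lr * grad_b)


def federated_average(updates):
    """Combine client parameters, weighted by each client's sample count."""
    total = sum(n for _, n in updates)
    w = sum(weights[0] * n for weights, n in updates) / total
    b = sum(weights[1] * n for weights, n in updates) / total
    return (w, b)


def train(clients, rounds=300):
    """Run federated rounds; the server sees parameters, never raw data."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [
            (local_update(global_weights, data), len(data))
            for data in clients
        ]
        global_weights = federated_average(updates)
    return global_weights
```

Real deployments typically add further protections, since model parameters can still leak some information about the data they were trained on.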

Transparency and Accountability from AI Vendors

It’s also essential for AI vendors to enhance transparency, providing organisations with tools to audit what data AI systems ingest, flag and delete inappropriate data, and comply with data protection principles. Introducing GDPR-style data subject rights for AI could offer an essential framework for keeping sensitive data safe.

To protect your business’s data in an AI-driven world, consider Neuways’ layered approach to cyber security. For more insights and updates on cyber security in AI, subscribe to our weekly Friday Digest.